The promise of a true private cloud is that it should not matter where you place your most demanding workloads.

True private clouds are those in which performance is consistently fast and efficient, regardless of whether an enterprise's workloads run on-premises or in public clouds. Whether you move those workloads into one or more public clouds, keep them entirely on-premises or partition them across several clouds, they should perform well enough that your users see no degradation.

IBM’s Red Hat acquisition kicks hybrid-cloud competition to the next level

When we look at this week's hybrid-cloud industry news, what stands out is IBM completing its acquisition of Red Hat. As Wikibon noted when the deal was announced, the acquisition positions IBM/Red Hat effectively in the race to help enterprises implement true private clouds. The key assets IBM/Red Hat has in that regard are IBM's mature private and public cloud offerings, Red Hat's OpenShift platform, and a global professional services and partner ecosystem to help clients modernize and migrate their workloads to hybrid environments.

The merged IBM/Red Hat is now a prime mover in the true private cloud arena. However, Wikibon considers this acquisition old news, and not just because it was first announced nine months ago.

The IBM/Red Hat deal is not as significant a market mover as it was last fall, because the entire cloud industry has since rallied around hybrid-cloud architectures. The merged vendors now contend with hybrid and multicloud competitors on all sides. Since the IBM/Red Hat deal was first announced, there have been significant hybrid-cloud announcements from Amazon Web Services Inc., Microsoft Corp.'s Azure, Google Cloud Platform, Oracle Cloud, VMware Inc., Dell Technologies Inc. and Cisco Systems Inc.

Google’s AI benchmark results signal a new performance-focused stage

Now that everybody in the cloud arena is angling for these opportunities, the industry battlefront has shifted toward convincing enterprise IT to move its most demanding workloads to a given provider's hybrid-cloud platform. The hyperscale cloud providers, especially AWS, Microsoft Azure and Google Cloud Platform, should have a clear advantage in these wars, and they are the main challengers to IBM/Red Hat's ambitions to grow its enterprise footprint in hybrid cloud.

With that perspective in mind, Wikibon calls attention to another, lower-profile industry announcement from this week. Google announced that Cloud TPU v3 Pods, its latest generation of AI-optimized supercomputers, set performance records in the latest round of the MLPerf benchmark competition when deployed in Google Cloud.

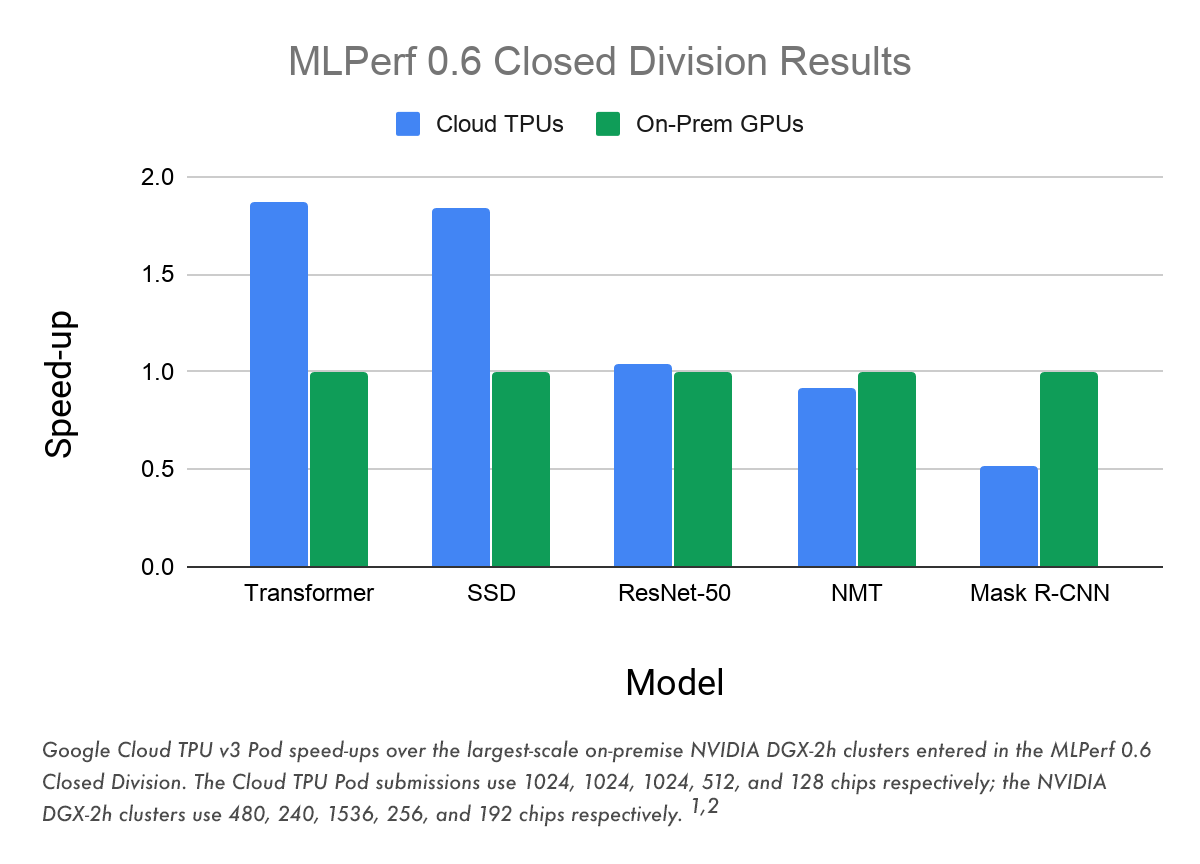

Source: Google Cloud

The MLPerf initiative, which Wikibon began to track more than a year ago, is an emerging industrywide standard for measuring the performance of training and inferencing workloads on clouds, servers, edge devices and other AI-optimized platforms. It provides benchmarks for use cases that predominate in today’s AI deployments, including computer vision, image classification, object detection, speech recognition, machine translation, recommendation, sentiment analysis and gaming.

Google's latest announcement marked a clear inflection point in the hybrid-cloud market's shift toward a pure horse race over which provider can best scale up the execution of AI/ML workloads. Most noteworthy was how Google used its benchmark results to support the claim that Cloud TPU Pods can process specific AI training workloads better than those same workloads running on any of several rival on-premises platforms.

According to the company, “Google Cloud is the first public cloud provider to outperform on-premise systems when running large-scale, industry-standard ML training workloads of Transformer [for machine translation], SSD [single shot detector, for object recognition], and ResNet-50 [for computer vision]. In the Transformer and SSD categories, Cloud TPU v3 Pods trained models over 84% faster than the fastest on-premise systems in the MLPerf Closed Division.”

As with any vendor-reported benchmarks, these results should be taken with a grain of salt. Still, it is clear that Google intends to expand its use of benchmarks in its struggle to gain ground in the hybrid-cloud arena against AWS and Microsoft Azure. Given that these results arrive so soon after the launch of Google's Anthos hybrid-cloud platform, it would not be surprising to see the company use the benchmarks in its hardware collaborations (with Cisco, VMware, Dell EMC, Hewlett Packard Enterprise Co., Intel Corp. and others) to build out full hybrid-cloud stacks optimized for these benchmarks.

Industry-standard AI benchmarks will accelerate hybrid cloud stacks

This benchmark mania will become a critically important go-to-market strategy in a segment that will only grow more commoditized over time. Google more or less put that ambition in writing, both in its latest announcement and in discussions this week with Wikibon: "We're committed to making our AI platform — which includes the latest GPUs, Cloud TPUs, and advanced AI solutions — the best place to run machine learning workloads," the company wrote. "Cloud TPUs will continue to grow in performance, scale, and flexibility, and we will continue to increase the breadth of our supported Cloud TPU workloads."

In fact, the company plans to offer prepackaged Cloud TPU v3 “slices” at all scales to enable Google Cloud Platform customers to process any of the benchmarked large-scale AI/ML workloads reliably at the “right performance and price point.”

An AI/ML benchmarking shooting war is all the more likely because Nvidia Corp. is also boasting of superior results in the same categories as Google, and then some. Nvidia's DGX SuperPod set benchmark records in training performance, including three in overall performance at scale and five on a per-accelerator basis.

Considering that Nvidia is a prime AI hardware-accelerator provider to many public and private cloud platforms, it’s highly likely that its benchmark results will figure into many hybrid-cloud solution providers’ positioning strategies.

Wikibon would not be surprised to see the merged IBM/Red Hat begin publishing the full range of MLPerf benchmarking results for workloads running on IBM Cloud and across its entire PowerAI solution portfolio. Under its Watson Anywhere strategy, it could do the same for AI workloads running on IBM Cloud Private for Data, Watson Machine Learning and other containerized, Kubernetes-orchestrated IBM AI services in other public, hybrid and multicloud environments, especially on OpenShift.

What customers should look for

For enterprise cloud professionals, this week's announcements, most notably IBM's completion of its Red Hat acquisition and Google's publication of its cloud's MLPerf benchmark results, signal the start of the next phase in the era of hybrid and multicloud computing.

Going forward, information technology professionals should evaluate vendors’ hybrid-cloud portfolios by the extent to which they can process machine learning and other AI workloads fast, scalably and at low cost. The MLPerf benchmarks are becoming the standard industry framework for measuring the performance of AI training and inferencing workloads on hardware, software and cloud services platforms. You should factor those into your evaluations of commercial offerings and insist that providers include their AI-optimized solutions in the benchmark competitions.

You should also recognize that MLPerf and other AI benchmarking frameworks are incomplete. Most of them focus on raw performance but skimp on measuring the efficiency, price-performance or total cost of ownership of commercial hybrid-cloud platforms for handling these workloads.

Here’s what Dave Vellante and Stu Miniman had to say on theCUBE this week about the IBM/Red Hat deal’s closing and where that vendor now finds itself in the competitive fray: